“Politics aside, there are 400 million users of the Office Open format, and we basically just recognized reality.” That is how the retired Secretary General of Ecma, Jan van den Beld, explained why it is so important to standardize OOXML.

Anyone else want to recognize reality? Maybe I can help.

Two questions to consider: 1) What is the actual state of OOXML adoption? and 2) What influence should market adoption of a technology have on its standardization?

On the first question, we should note that the 400 million users figure quoted by van den Beld in no way concerns OOXML. That figure is merely Microsoft’s estimate of the total number of Microsoft Office users, of all versions, worldwide. Only a small percentage of them are using OOXML.

Let’s see if we can estimate the number.

How are Office 2007 sales? One (leaked) estimate (in September) was 70 million. But a follow-up statement makes it clear this is total Office licenses sold, of all versions. This is probably on the high end, not indicating installations, or even real end sales, since Microsoft typically reports sales into the channel. So that number must be reduced by some factor to account for real installations.

What percentage of Office users are running Office 2007? Joe Wilcox quotes Gartner, saying “Our Symposium survey showed Office at greater than 10 percent installed base…”

And not every Office 2007 installation will use the default OOXML formats. I’ve heard that corporate installations often change their configuration to default to Compatibility Mode, so that Office 2007 saves in the legacy binary formats, for the increased interoperability this offers.

How does this net out? Something more than 40 million and less than 70 million seems the right neighborhood.
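The arithmetic behind that neighborhood can be sketched as follows. This is a rough illustration only: the three input figures are the ones quoted above, but the way they are combined, and the `compat_fraction` parameter in particular, are my assumptions. No source gives a number for how many installations default to Compatibility Mode.

```python
# Figures quoted in the post. How they are combined here is an assumption.
TOTAL_OFFICE_USERS = 400_000_000    # Microsoft's estimate, all Office versions
OFFICE_2007_SHARE_FLOOR = 0.10      # Gartner: "greater than 10 percent installed base"
LEAKED_LICENSE_COUNT = 70_000_000   # leaked September estimate (licenses, all versions)


def office_2007_range(total_users, share_floor, license_ceiling):
    """Return a (low, high) bracket for Office 2007 installations.

    low  = the survey floor applied to the total user base
    high = the leaked license count, taken as a generous upper bound
    """
    low = round(total_users * share_floor)
    return low, license_ceiling


def ooxml_users(office_2007_users, compat_fraction):
    """Discount installations that save in the legacy binary formats.

    compat_fraction is hypothetical: the share of installations
    configured to default to Compatibility Mode.
    """
    return round(office_2007_users * (1 - compat_fraction))


low, high = office_2007_range(
    TOTAL_OFFICE_USERS, OFFICE_2007_SHARE_FLOOR, LEAKED_LICENSE_COUNT
)
print(f"Office 2007 installations: between {low:,} and {high:,}")
```

Any nonzero `compat_fraction` pushes the count of people actually saving OOXML below the bracket for Office 2007 installations, which is why even the low end is probably optimistic as a count of OOXML users.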

Let’s look for some more data points.

Take the example of OpenOffice, which has seen over 100 million downloads, not including copies that are already included with Linux distributions. So I believe there are far more OpenOffice users than Office 2007 users. Of course, not all OpenOffice users save in ODF format. Some will change the defaults to use the legacy Microsoft binary formats.

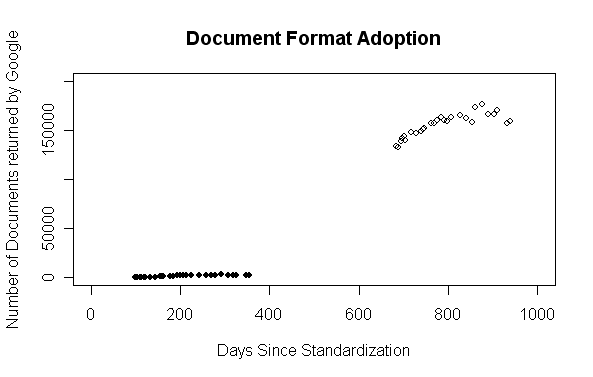

Let’s take a look at an updated version of a chart I made back in May, with data now current through 11/27/2007.

The data here shows the number of documents reported by Google over time for ODF and OOXML documents. Hollow circles are ODF data points; solid circles are OOXML data points. (Yes, I need to figure out how to do scatterplot legends in R.) The X-axis does not show the date. That would not be fair, since ODF had a significant head start in standardization and adoption. So in order to have a fair comparison, both formats are shown against the number of “days since standardization”, counted from May 1st, 2005 for ODF and December 7th, 2006 for OOXML, the days the formats were approved by OASIS and Ecma respectively.
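For anyone who wants to reproduce the X-axis, here is a minimal sketch of the “days since standardization” alignment. The approval dates and the November 27, 2007 snapshot date come from this post; the function and variable names are mine, and this is not the script used to draw the chart (that was done in R).

```python
from datetime import date

# Approval dates as given in the post (OASIS for ODF, Ecma for OOXML).
APPROVAL_DATES = {
    "ODF": date(2005, 5, 1),
    "OOXML": date(2006, 12, 7),
}


def days_since_standardization(fmt, observed):
    """Map a calendar date to the chart's X value for the given format."""
    return (observed - APPROVAL_DATES[fmt]).days


# The most recent observation date mentioned in the post.
snapshot = date(2007, 11, 27)

for fmt in APPROVAL_DATES:
    print(fmt, days_since_standardization(fmt, snapshot))
```

On the snapshot date this puts ODF at day 940 and OOXML at day 355, so the two series are compared at the same age rather than on the same calendar day.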

Next week is the one-year anniversary of Ecma’s approval of OOXML as an Ecma Standard. The news is not good. There are fewer than 2,000 OOXML documents on the entire internet (as indexed by Google at least) and the trend is flat.

What about ODF? Almost 160,000 and growing strongly.

Now we shouldn’t be so careless as to say that there are only 2,000 OOXML documents in existence, or for that matter only 160,000 ODF documents. Not all documents are posted on the web. In fact, most of them are sitting on hard drives, in mail files, behind corporate firewalls, etc. The documents that Google sees are only a sampling of real-world documents. But this is true of both ODF and OOXML. My hard drive is loaded with ODF documents that are not included in the above sampling. But however you spin it, the minuscule number of OOXML documents and their pathetic growth rate should be a cause of concern and distress for Microsoft.

Where are all the OOXML documents? What governments have adopted OOXML? What agencies? What major companies? If there were an adoption bigger than a Cub Scout pack we would have heard it trumpeted all over the headlines. Listen. Do you hear anything? No. The silence speaks volumes.

But for the sake of argument, what if the numbers were different? What if there were millions of documents on the web in OOXML format? Would that have any relevance to the JTC1 standardization process? The answer is a clear “No”. Market share, or even market domination, is not a criterion. In the US NB, INCITS, we are required to make our decision based on “objective technical factors”. Making a decision to favor a proposed standard because of the proposer’s market share would bring antitrust risks.

Consider this: In JTC1 we vote. One country one vote. We do not vote based on a nation’s GDP. Jamaica and Japan are equal in ISO. We have engineers review the standards. We do not bring in accountants to review financial statements and verify inventories. If we want to make decisions based on market share then we should scrap JTC1 altogether and hand standardization over to revenue department authorities to administer.

But that would then perpetuate a technological neo-colonialism where the developed world controls the patents, the capital and the standards, and the rest of the world licenses, pays and obeys. There’s the rub. Where standards are open, consensually developed in a transparent process and made available to all to freely implement, there we lower barriers to implementation, level the playing field and allow all nations of the world to compete based on their native genius. But where standards are bought we end up with bad standards and a worse world for it.