I noticed a curious argument in Jonathan Corbet’s LWN article “Supporting OOXML in LibreOffice” (behind a paywall). Why should we support OOXML?

> …as has been pointed out in the discussion, Microsoft will, someday, phase out support for its (equally proprietary) DOC format, leaving OOXML as the only real option for document interchange. There appears to be little hope that Microsoft’s ODF support will be sufficient to make ODF a viable alternative. So any office productivity suite which aspires to millions of users, and which does not support OOXML, will find itself scrambling to add that support when DOC is no longer an option. It seems better to maintain (and improve) that support now than to be rushing to merge a substandard implementation in the future.

Really? The same company that is unable to fix a leap-year calculation bug from 20 years ago, for fear of breaking backwards compatibility, is going to remove support for its binary formats? Seriously, is that what people are saying? This sounds like something Microsoft would say to scare people into migrating.

But don’t listen to my opinions. Let’s look at the numbers. I’ve been tracking document counts via Google for almost four years now, looking at the relative distribution of document types, across OOXML, ODF, Legacy Binary, PDF, XPS, etc. Because the size of the web is growing, one cannot fairly compare the absolute numbers of documents from week to week. But the distribution of documents over time is something worth noting.

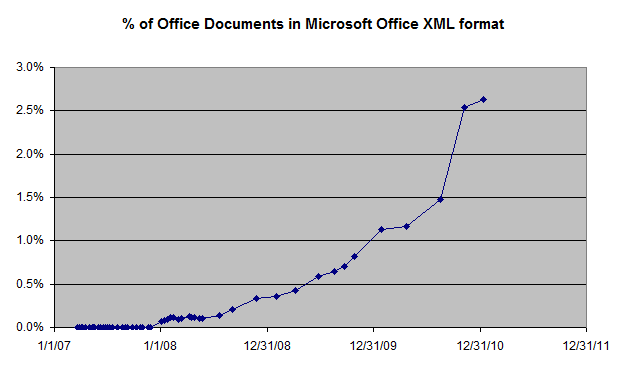

The chart shows OOXML documents on the web as a percentage of all MS Office documents. Note carefully the scale of the chart: it peaks at less than 3%. So more than 97% of the Microsoft Office documents on the web today are in the legacy binary formats, even four years after Office 2007 was released.
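For anyone who wants to repeat the arithmetic with their own counts, here is a minimal sketch of the share calculation. The extension groupings, the function name `ooxml_share`, and the sample counts are all assumptions made for illustration; the counts are placeholders, not my measurements, and the sketch does not show how the counts are collected.

```python
# Assumed grouping of file extensions into format families (illustrative only).
OOXML_EXTENSIONS = {"docx", "xlsx", "pptx"}
LEGACY_BINARY_EXTENSIONS = {"doc", "xls", "ppt"}


def ooxml_share(counts):
    """Return OOXML documents as a percentage of all MS Office documents.

    `counts` maps a file extension to the number of documents found.
    Extensions outside the two families (pdf, odt, ...) are ignored.
    """
    ooxml = sum(counts.get(ext, 0) for ext in OOXML_EXTENSIONS)
    legacy = sum(counts.get(ext, 0) for ext in LEGACY_BINARY_EXTENSIONS)
    total = ooxml + legacy
    if total == 0:
        raise ValueError("no MS Office documents counted")
    return 100.0 * ooxml / total


# Placeholder counts, NOT real measurements.
sample_counts = {"doc": 800, "xls": 120, "ppt": 60, "docx": 12, "xlsx": 5, "pptx": 3}
print(f"OOXML share: {ooxml_share(sample_counts):.1f}%")
```

Because the result is a ratio within a single snapshot, it can be compared from week to week even as the absolute number of documents on the web grows.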

Of course, for any given organization these numbers may vary. Some are 100% on the XML formats. Some are 0% on them. If you look at just “gov” internet domains, the percentage today is only 0.7%. If you look at only “edu” domains, the number is 4.5%. No doubt, within organizations, non-public work documents might have a different distribution. But clearly the large number of existing legacy binary documents on government web sites alone is sufficient to prove my point. DOC is not going away.

I call “FUD” on this one.