I suppose the downside of a blog post containing only a picture is that there is nothing for anyone to quote. So here are a few themes that struck me while putting this chart together:

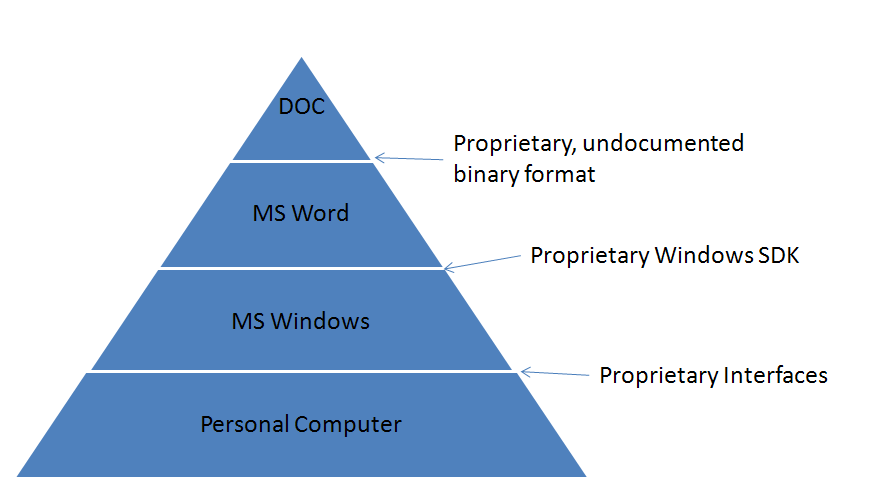

- Microsoft once made documentation of the binary file formats readily available, and in fact encouraged programmers to use those formats. But then around 1999 they reversed course and eliminated such documentation. At the time, working at Lotus, I had no idea what motivated this change. It was only years later, when Microsoft internal memos were released in cases like Comes v. Microsoft, that the full picture emerged. The file format was viewed by Microsoft as a strategic tool, used to support the overall Microsoft platform, not the user. The format was designed to preserve their vendor lock-in. The availability of the file format documentation to competitors was limited, as a matter of corporate policy. So this reminds us that just because something is documented and available today, nothing prevents Microsoft from changing their mind at a later point and removing the documentation, failing to update it with new releases, or making it available only under a more restrictive license. Since Ecma owns the OOXML specification, as well as the future maintenance of it, any belief in the long-term openness of this format depends on your trust in Microsoft’s future behavior in this area.

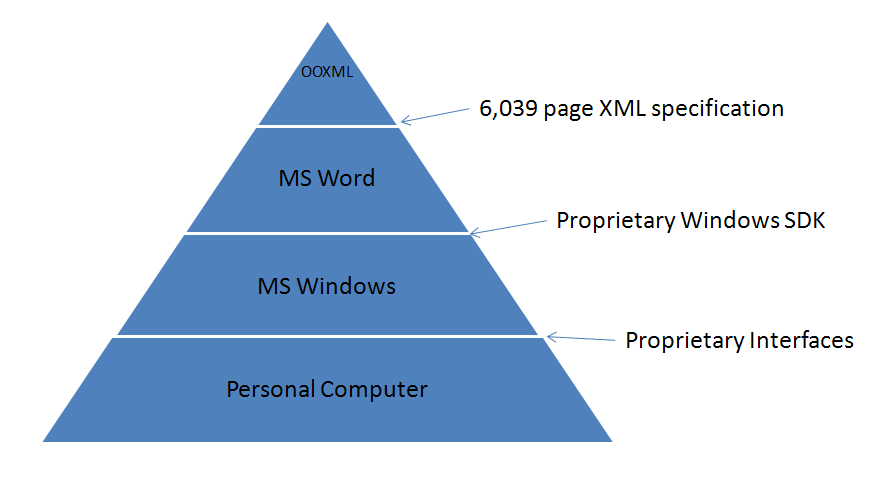

- Like any durable goods monopoly (and few things are as durable as software) Microsoft’s largest competitor is their own install base. Microsoft has made many attempts at moving beyond the binary formats in the past, with Office 2000, Office XP and Office 2003. But in each case they failed. These were all false starts and abandoned attempts. So we should look for signs that OOXML is actually Microsoft’s real direction and not another false start or dead end. My guess is that OOXML is merely a transitional format, much like Windows ME was in the OS space, a temporary hybrid used to ease the transition from 16-bit to the 32-bit platform that would eventually come (Windows 2000). Microsoft doesn’t want to support all of the quirks of their legacy formats forever. That just leads to bloated, fragile code and higher development and support costs. They would rather have clean, structured markup, like ODF. But the question is, how do you get there? The answer is straightforward: First, eliminate the competition. Second, move users in small steps, promising the comfort of continuity and safety. Third, once you have eliminated competition and have the users on the OOXML format that no one but Microsoft fully understands, then you may have your will of them. For example, introduce a new format that drops support for legacy formats and force everyone to upgrade. They are pretty much doing this already on the Mac by dropping support for VBA in the next version of the Mac Office. Even a cursory look at OOXML shows that it was not designed for long-term use, even by Microsoft. So the question I have is, what is the real format that they are heading toward?

- Microsoft, after pretty much ignoring document standards for over a decade, suddenly got religion in late 2005 and rushed whatever they had on hand into Ecma. Remember, just months earlier they had recommended the Office 2003 Reference Schemas to Massachusetts for official use. I’m certainly glad Massachusetts did not fall for that by putting their resources on another dead format in the Microsoft format graveyard. OOXML was not designed to be a standard. It is just a proprietary specification that Microsoft has dumped, at the last minute, into ISO’s lap, in an attempt to translate their market domination into a standards imprimatur in order to further cement their market domination. It is a win-win situation for them. Either they have an effective monopoly in office applications and an ISO standard, or they have an effective monopoly in office applications. Nice situation for them either way.