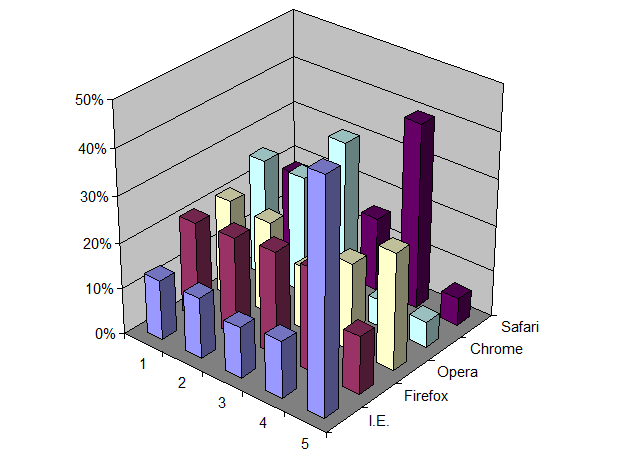

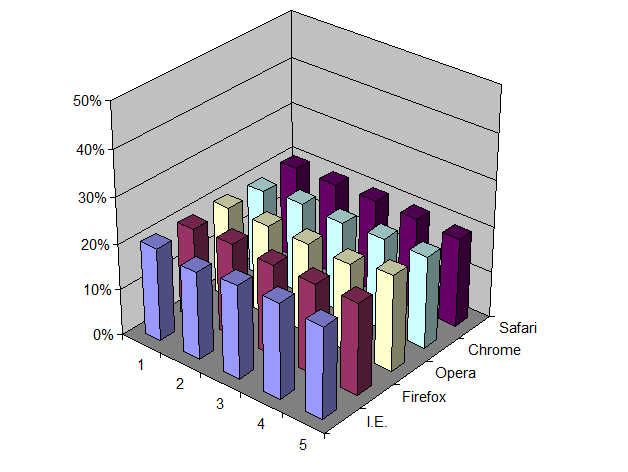

A few weeks ago I wrote about Microsoft’s “browser choice” ballot page in Europe, which in its debut used a flawed algorithm when attempting to perform a “random shuffle” of the browser choices, a feature specifically called for in their agreement with the EU. That bug was fixed soon after it was reported. But I recently received an email from a correspondent going by the name “Skoon” who reported a more serious bug, one that appears only in the Polish-language translation of the ballot choice screen.

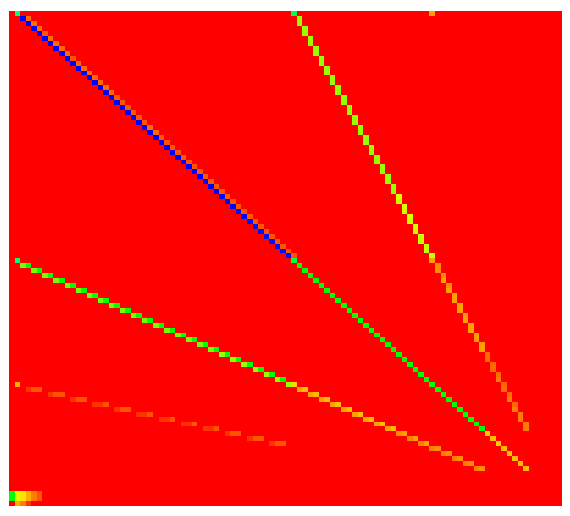

You can go directly to this version of the page via this URL: www.browserchoice.eu/BrowserChoice/browserchoice_pl.htm. Try loading it a few times. Does it look random to you? I tried it in Internet Explorer, Firefox, Chrome and Opera and got the same result each time. The order never changes: Internet Explorer is always first, followed by Firefox, Opera, Chrome and Safari, in that order. There is no shuffling going on at all.

I won’t bore you with the details of why this is so. Let’s just say that this is a JavaScript error involving a failure to properly escape embedded quotation marks in one of the browser descriptions. Because of the error, the script aborts and the randomization routine is never called.
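For readers who do want a feel for this class of failure, here is a minimal sketch. It is not the code from the ballot page: the description text, the `descriptions` variable and the `shuffle` stub are all hypothetical, invented only to show how one unescaped quotation mark inside a string literal becomes a syntax error that stops the whole script before the shuffle call is reached.

```javascript
// Hypothetical script body: the description contains unescaped double quotes.
// `descriptions` and `shuffle` are illustrative names, not taken from the real page.
const brokenSource = [
  'var descriptions = ["Fast and "safe" browsing"];',
  'shuffle(descriptions);'
].join('\n');

// The same script with the embedded quotes escaped as \" inside the literal.
const fixedSource = [
  'var descriptions = ["Fast and \\"safe\\" browsing"];',
  'shuffle(descriptions);'
].join('\n');

// Runs a script body with a stub shuffle and reports whether shuffle was called.
function runScript(source) {
  let shuffleCalled = false;
  const shuffle = () => { shuffleCalled = true; };
  try {
    // The whole body is parsed before any of it runs, so a syntax error
    // anywhere in it means not a single statement executes.
    const script = new Function('shuffle', source);
    script(shuffle);
    return { shuffleCalled, error: null };
  } catch (e) {
    return { shuffleCalled, error: e.name };
  }
}

const broken = runScript(brokenSource);
const fixed = runScript(fixedSource);
console.log(broken);
console.log(fixed);
```

In the broken version the parser sees the string `"Fast and "` followed by a stray identifier, gives up, and the shuffle stub is never invoked. With the quotes escaped, the script parses and the call goes through.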

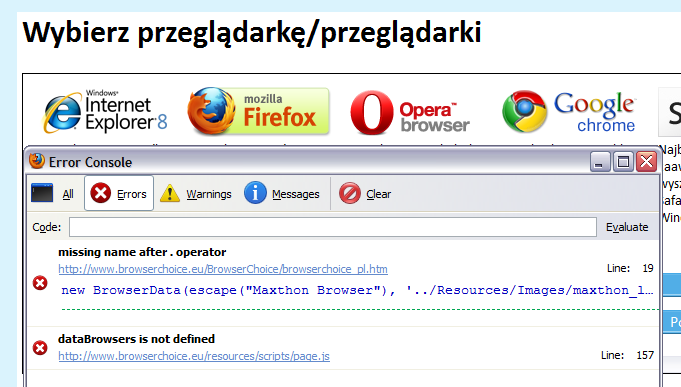

See if you can find the bug. Hint: turn on JavaScript error checking in your browser (e.g., Tools\Error Console in Firefox) and the error will pop out immediately.

If you can detect this error in 30 seconds by enabling Internet Explorer’s own JavaScript error detection facility — and I believe you can — then we can assume that anyone could have done this, even Microsoft. The odd thing is that evidently no one at Microsoft bothered to check this page for JavaScript errors, or even check the page to see if it actually worked. We’re not talking about sophisticated statistical testing here. Any QA on the page, any at all, would have found this error.