“Politics aside, there are 400 million users of the Office Open format, and we basically just recognized reality.” So said the retired Secretary General of Ecma, Jan van den Beld, explaining why it is so important to standardize OOXML.

Anyone else want to recognize reality? Maybe I can help.

Two questions to consider: 1) What is the actual state of OOXML adoption? and 2) What influence should market adoption of a technology have on its standardization?

On the first question, we should note that the 400 million users figure quoted by vdBeld in no way concerns OOXML. That figure is merely Microsoft’s estimate of the total number of Microsoft Office users, of all versions, worldwide. Only a small percentage of them are using OOXML.

Let’s see if we can estimate the number.

How are Office 2007 sales? One (leaked) estimate, from September, was 70 million. But a follow-up statement makes it clear that this is total Office licenses sold, of all versions. This is probably on the high end: it does not indicate installations, or even real end-user sales, since Microsoft typically reports sales into the channel. So that number must be reduced by some factor to account for real installations.

What percentage of Office users are running Office 2007? Joe Wilcox quotes Gartner, saying “Our Symposium survey showed Office at greater than 10 percent installed base…”

And not every Office 2007 installation will use the default OOXML formats. I’ve heard that corporate installations often change their configuration to default to Compatibility Mode, so that Office 2007 saves in the legacy binary formats, for the increased interoperability this offers.

How does this net out? Something more than 40 million and less than 70 million seems the right neighborhood.
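Spelled out, the floor presumably comes from applying Gartner’s “greater than 10 percent” to Microsoft’s 400 million Office users, and the ceiling is the leaked 70 million license figure. Both inputs are rough estimates, and the share of these installations actually saving OOXML by default is smaller still, given Compatibility Mode deployments.

$$
0.10 \times 400\ \text{million} = 40\ \text{million} \;<\; N_{\text{Office 2007}} \;<\; 70\ \text{million}
$$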

Let’s look for some more data points.

Take the example of OpenOffice, which has seen over 100 million downloads, not including copies already bundled with Linux distributions. So I believe there are far more OpenOffice users than Office 2007 users. Of course, not all OpenOffice users save in ODF format. Some will change the defaults to use the legacy Microsoft binary formats.

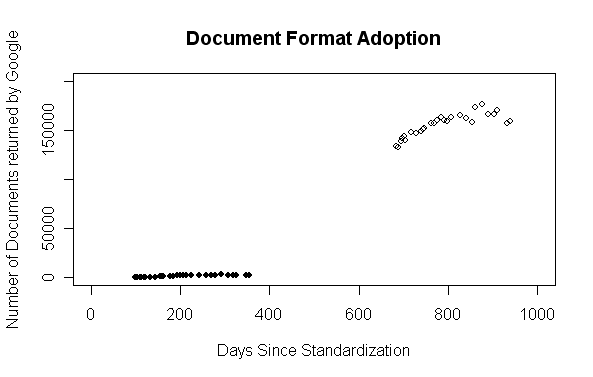

Let’s take a look at an updated version of a chart I made back in May, with data now current through 11/27/2007.

The data here shows the number of ODF and OOXML documents reported by Google over time. Hollow circles are ODF data points; solid circles are OOXML data points. (Yes, I need to figure out how to do scatterplot legends in R.) The X-axis does not show the date. That would not be fair, since ODF had a significant head start in standardization and adoption. So in order to have a fair comparison, both formats are plotted against the number of “days since standardization”, counted from May 1st, 2005 for ODF and December 7th, 2006 for OOXML, the days the formats were approved by OASIS and Ecma respectively.
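For anyone who wants to reproduce the x-axis, here is a minimal R sketch of the “days since standardization” conversion. It assumes only the two approval dates stated above; `std_dates` and `days_since_std` are illustrative names, not part of the script that produced the chart.

```r
# Standardization dates given in the post: OASIS approval of ODF and
# Ecma approval of OOXML.
std_dates <- c(ODF = as.Date("2005-05-01"), OOXML = as.Date("2006-12-07"))

# Hypothetical helper: convert calendar dates (strings or Date objects) into
# the chart's x-axis unit, whole days elapsed since the format was approved.
days_since_std <- function(date, fmt) {
  as.numeric(as.Date(date) - std_dates[[fmt]], units = "days")
}

days_since_std("2007-11-27", "ODF")    # 940
days_since_std("2007-11-27", "OOXML")  # 355
```

On the last data date, ODF is 940 days past standardization and OOXML 355, which is why the OOXML series stops so much further to the left.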

Next week is the one-year anniversary of Ecma’s approval of OOXML as an Ecma Standard. The news is not good. There are fewer than 2,000 OOXML documents on the entire internet (as indexed by Google at least) and the trend is flat.

What about ODF? Almost 160,000 and growing strongly.
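One way to put a number on “flat” versus “growing strongly” is the slope of a least-squares line through the Google counts, in documents added per day since standardization. A minimal R sketch, assuming you already have the counts and their day offsets in two vectors; `daily_growth` is a hypothetical helper, not how the chart above was produced.

```r
# Hypothetical helper: average documents added per day, estimated as the slope
# of an ordinary least-squares line through (days since standardization, count).
# A slope near zero corresponds to a flat trend.
daily_growth <- function(days, count) {
  unname(coef(lm(count ~ days))[["days"]])
}
```

Comparing `daily_growth()` for the ODF and OOXML series over the same range of days since standardization keeps the comparison on the same footing as the chart.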

Now we shouldn’t be so careless as to say that there are only 2,000 OOXML documents in existence, or for that matter only 160,000 ODF documents. Not all documents are posted on the web. In fact, most of them are sitting on hard drives, in mail files, behind corporate firewalls, etc. The documents that Google sees are only a sample of real-world documents. But this is true of both ODF and OOXML. My hard drive is loaded with ODF documents that are not included in the above sample. But however you spin it, the minuscule number of OOXML documents and their pathetic growth rate should be a cause of concern and distress for Microsoft.

Where are all the OOXML documents? What governments have adopted OOXML? What agencies? What major companies? If there were an adoption bigger than a Cub Scout pack, we would have heard it trumpeted all over the headlines. Listen. Do you hear anything? No. The silence speaks volumes.

But for the sake of argument, what if the numbers were different? What if there were millions of documents on the web in OOXML format? Would that have any relevance to the JTC1 standardization process? The answer is a clear “No”. Market share, or even market domination, is not a criterion. In the US NB, INCITS, we are required to make our decision based on “objective technical factors”. Making a decision to favor a proposed standard because of the proposer’s market share would bring antitrust risks.

Consider this: In JTC1 we vote. One country one vote. We do not vote based on a nation’s GDP. Jamaica and Japan are equal in ISO. We have engineers review the standards. We do not bring in accountants to review financial statements and verify inventories. If we want to make decisions based on market share then we should scrap JTC1 altogether and hand standardization over to revenue department authorities to administer.

But that would then perpetuate a technological neo-colonialism where the developed world controls the patents, the capital and the standards, and the rest of the world licenses, pays and obeys. There’s the rub. Where standards are open, consensually developed in a transparent process and made available to all to freely implement, there we lower barriers to implementation, level the playing field and allow all nations of the world to compete based on their native genius. But where standards are bought we end up with bad standards and a worse world for it.

As a vendor of a spreadsheet component (supporting both new and old formats natively), I’ll second that: there is ZERO demand for the new file formats (xlsx). I’ve been supporting them for one whole year, so the figures mean something. The only demand I have had so far is for fixes to incompatibilities in compatibility mode. It must be said that Microsoft made the BIFF reading module in Excel 2007 stricter, and as a result a ton of non-Microsoft vendors out there have had to update their software (so that .xls files open correctly in Excel 2007, even though they already open fine in older Excel versions).

When it comes to market share, I think it’s important to remember that no matter how hard Microsoft worked to make the transition to the new file format as seamless as possible (for that, they pushed a Windows Update, and released an update in Excel 2003 Service Pack 2 with dialog boxes dedicated to such scenarios, self-serving again), Office 2007 uses a new user interface which requires full retraining. It is hard to overstate how big a deal that is. The ribbon is just the worst user interface ever, so I’m not surprised by the slow adoption.

When it comes to file formats, it’s also interesting to note that regular Office developers, who have been using VBA macros so far, still have no reason to change, and therefore no reason to move to Excel 2007 VBA macros, since VBA is one of the things that hasn’t changed at all in more than ten years. (Note that VBA has not even been maintained in Redmond for a number of years; that is done by a consulting firm called SummSoft.) If Office developers were to access the file format directly, they would lose the application’s runtime updates whenever changes are made to cells and other objects, without which the spreadsheet becomes corrupt and/or inconsistent (I gave examples of that in my article “OOXML is defective by design”). And that too is a big deal.

Custom XML? Microsoft’s marketing people position this as an important feature (you can read their blogs and marketing brochures). Unfortunately, there is no way to bind a Custom XML part to an Excel table or list. Unlike Word 2007, Excel 2007 has no such thing as “content controls”. So the claimed features don’t even exist.

Even though Microsoft wants you to store XML inside files, because that obviously increases lock-in and is *good* for them, the inability to do anything with it in Excel 2007 is just the result of the mediocrity of their engineers.

Last but not least, the OOXML community. If you head over to openxmldeveloper.org (owned by Microsoft, self-serving here), you’ll quickly realize there is no such thing as a community there. Traffic is extremely low, and in fact the only person answering trivial questions is a consultant paid by Microsoft (Sonata Software). Once again, we have Microsoft paying non-Microsoft people to create a false sense of enticement. It’s particularly ironic when you know that Microsoft often boasts of the “more than 300,000 Office developers out there”. Admittedly, the Usenet newsgroups do see traffic, but that traffic is not on the one and only OOXML-related website. That leaves the question open: where are they, then? If OOXML is so good, how come no one seems to use it?

-Stephane Rodriguez

Legends in an R plot (a very simple example; you will have to adapt it):

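    set.seed(1)  # reproducible random example data
    x <- c(seq(100, by = 10, length.out = 20), seq(700, by = 15, length.out = 20))
    y <- c(rnorm(20, 1000, 100), rnorm(20, 150000, 10000))
    # factor levels sort alphabetically, so "odf" = 1 and "ooxml" = 2
    f <- factor(c(rep("ooxml", 20), rep("odf", 20)))
    plot(y ~ x, pch = as.numeric(f))
    # build the legend from the factor itself so labels and symbols match
    legend("topleft", legend = toupper(levels(f)), pch = seq_along(levels(f)))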

Regarding R graphics, just check out the ggplot2 package and pimp your plots. Really easy!

It’s not really fair to play down the ’70 million sales’ of MS Office, but play up the ‘100 million downloads’ for OpenOffice, since both numbers are equally fishy.

The correlation between downloads and use is vague at best – for example, a single download might go to a disk image that’s replicated across a company. Or, like Firefox, most downloads mightn’t translate to any users at all.

Similarly, a figure of ’70 million sales’ that comes filtered through two journalists that don’t speak the same language, not to mention the internal Microsoft grapevine, could originally have had any number of caveats. For example, the number might refer to Europe alone. Also, the number of legal sales completely ignores the substantial number of people who pirate Microsoft software.

Incidentally, these criticisms make your chart all the more important to keep maintaining. While there are plenty of criticisms that could be levelled at it, at least it’s a direct measure of file format use (rather than application sales), with roughly comparable measures for both formats.

– Andrew

It would be interesting to compare the number of doc/xls files on the web alongside ODF/OOXML.

Thanks for the R tips. I’m trying out the ggplot2 package. Some interesting stuff there. I’m convinced that R is one of the greatest open source applications ever made, making available for free the kind of power that you used to pay $20,000+ for.

@Andrew, if the Office 2007 numbers were better, we would have heard. Microsoft hypes things so much that no good news would go unreported. But the 400 million overall number seems to be something they stand behind as an estimate of overall adoption. That is probably why vdBeld used it, though he mistakenly implied it applied to OOXML.

There is one way to figure this out, but it would require some work. Spider the web, download a random sample of 100,000 or so Office documents, and parse them using Apache POI or something like that. Figure out the last save date as well as which application version saved the file (Office 2000 versus Office XP, etc.). From that you could figure out, for that collection of documents, what the mix of Office versions was over time.
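For the OOXML part of such a sample, the same information is available without POI: an OOXML file is a zip package whose optional docProps/app.xml part records the saving application and its version, and whose docProps/core.xml part records the last-modified time. A minimal R sketch, assuming those parts are present; the helper names are hypothetical, and the regex shortcut stands in for a real XML parser. Legacy binary .doc/.xls files would still need something like POI.

```r
# Hypothetical helper: text content of the first <tag ...>...</tag> element in
# an XML string. A regex suffices for these small, flat property parts; a real
# crawler should use an XML parser instead.
xml_field <- function(xml, tag) {
  pattern <- paste0("<", tag, "(\\s[^>]*)?>([^<]*)</", tag, ">")
  hit <- regmatches(xml, regexpr(pattern, xml, perl = TRUE))
  if (length(hit) == 0) return(NA_character_)
  sub(pattern, "\\2", hit, perl = TRUE)
}

# Hypothetical helper: properties of one OOXML package (.docx/.xlsx/.pptx).
# Assumes the optional docProps/app.xml and docProps/core.xml parts exist.
ooxml_props <- function(path) {
  dir <- tempfile("ooxml")
  parts <- c(app = "docProps/app.xml", core = "docProps/core.xml")
  unzip(path, files = parts, exdir = dir)
  xml <- vapply(parts, function(p)
    paste(readLines(file.path(dir, p), warn = FALSE), collapse = ""), "")
  data.frame(
    application = xml_field(xml[["app"]], "Application"),       # e.g. "Microsoft Office Word"
    app_version = xml_field(xml[["app"]], "AppVersion"),        # e.g. "12.0000" for Office 2007
    modified    = xml_field(xml[["core"]], "dcterms:modified"), # last save, W3CDTF timestamp
    stringsAsFactors = FALSE
  )
}

# Cross-tabulate a crawled sample by application version and year of last save.
version_mix <- function(paths) {
  props <- do.call(rbind, lapply(paths, ooxml_props))
  table(version = props$app_version, year = substr(props$modified, 1, 4))
}
```

Calling `version_mix()` on the paths of the downloaded files gives one row per AppVersion value and one column per year, which is the “mix over time” described above.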

@Anonymous, as for binary/legacy documents, the numbers are quite high. Even greater for PDF. So PDF is 5x more popular than the legacy office formats, and the legacy formats are 400x more popular than ODF, and ODF is around 100x more popular than OOXML.
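Chaining those rounded ratios gives the implied gap between PDF and OOXML, and the ODF/OOXML ratio can be cross-checked against the totals quoted in the post (almost 160,000 ODF versus fewer than 2,000 OOXML documents). These are back-of-the-envelope products, not measurements.

$$
\frac{\text{PDF}}{\text{OOXML}} \approx 5 \times 400 \times 100 = 200{,}000,
\qquad
\frac{\text{ODF}}{\text{OOXML}} \approx \frac{160{,}000}{2{,}000} = 80
$$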

Of course, the legacy formats and PDF have been around a lot longer than ODF or OOXML. What you would really want for a fair comparison is the breakdown for documents created since, say, January 1st, 2007: what % of new documents are in XML formats versus legacy binaries? To answer this would require a bit more work.

So are we at the point of ‘discontinuous change’?

It’s happened a few times before in related fields.

Punch-card readers got better and better, but then got rapidly obsoleted by paper-tape readers.

Magnetic core memory got better and better; but nowadays no-one makes it, and the price is very different depending on whether you’re trying to sell some surplus, or buy some to maintain an old-but-vital chemical plant process control computer.

Typewriters got better and better, but then got rapidly obsoleted by Personal Computers.

AT&T rental telephone handsets got better and better, but who rents a fixed-line telephone handset from AT&T now?

VHS videotapes and tape decks got better and better, but all the movies come out on DVD now. Does anyone buy VHS?

Are Microsoft’s formats getting better and better, but going into obsolescence because of open formats centred around ISO/IEC 26300 and OpenOffice.org?

Is it time to segment the market? For all who can jump off the One Microsoft Way to do just that?

Google’s search can translate .odt files into HTML, but if you look at the .docx rendition you essentially get a zip file directory listing.

Given that Google doesn’t index .docx files yet, how sure are you that they’re bothering to list all the .docx files they find in their index?

I don’t particularly doubt that .docx files are less common, particularly since there is much less reason to post Office files in the new format, but I do wonder how accurate your figures are. In particular, there’s no sense of what the margin of error is – maybe you could ask someone at Google to comment on that?

I think this shows that Google is depressing numbers on certain filetypes or has a very inefficient file detection system:

Using Live search I find much higher numbers:

64100 DOCX files

http://search.live.com/results.aspx?q=contains%3Adocx&go=Zoeken&mkt=us-us

253000 ODT files

http://search.live.com/results.aspx?q=contains%3Aodt&go=Zoeken&mkt=us-us

This is not entirely accurate, however, as a lot of the existing .DOCX files are served via ASP pages, which do not show up accurately in this kind of search.

To an extent, all the word processing formats are really working formats; if the output is PDF, HTML or printed text, you shouldn’t expect to see the formats on the web.

What may be possible is to estimate what percentage of PDF files are actually created by OOo or Word 2007 rather than by Acrobat or PDFCreator. You’d take a sample of the PDFs and look inside to see what tool built them. If you can create a query that includes dates (all PDFs from Oct 2007), then take a sample of those, you could see how the percentage changed.
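A rough R sketch of that sampling idea, assuming each sampled PDF keeps its Info dictionary uncompressed so that the /Producer entry can be found by a plain text search (PDFs that store metadata only in compressed object streams or XMP are not handled, and escaped parentheses inside the producer string are ignored). The helper names are hypothetical; `binom.test()` from base R’s stats package supplies an exact confidence interval, so the percentage comes with a margin of error.

```r
# Hypothetical helper: the /Producer string of a PDF's Info dictionary.
# Assumes the dictionary is stored as plain, uncompressed text.
pdf_producer <- function(pdf_text) {
  pattern <- "/Producer\\s*\\(([^)]*)\\)"
  m <- regexpr(pattern, pdf_text, perl = TRUE, useBytes = TRUE)
  hit <- regmatches(pdf_text, m)
  if (length(hit) == 0) return(NA_character_)
  sub(pattern, "\\1", hit, perl = TRUE, useBytes = TRUE)
}

# Read a PDF's raw bytes as one string, dropping NUL bytes that rawToChar() rejects.
read_pdf_text <- function(path) {
  bytes <- readBin(path, what = "raw", n = file.size(path))
  rawToChar(bytes[bytes != as.raw(0)])
}

# Share of sampled PDFs whose producer matches `pattern`, with an exact
# (Clopper-Pearson) 95% binomial confidence interval.
producer_share <- function(producers, pattern) {
  producers <- producers[!is.na(producers)]  # drop PDFs with no /Producer found
  hits <- sum(grepl(pattern, producers, useBytes = TRUE))
  ci <- binom.test(hits, length(producers))$conf.int
  list(estimate = hits / length(producers), lower = ci[1], upper = ci[2])
}
```

Usage would look like `producers <- vapply(pdf_paths, function(p) pdf_producer(read_pdf_text(p)), "")` followed by `producer_share(producers, "OpenOffice")`, where `pdf_paths` is the (hypothetical) set of sampled files. Repeating this per month of the date-restricted query would show how the percentage changes.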

It would be hard to do this, except for a search engine company that already indexes PDFs, such as Yahoo, Google, and, um, Microsoft.

The Live Search query you use returns pages that link to a file of a particular type. The Google query I used returns the actual document. These are two very different things, right? A single file may be linked to from multiple HTML pages and Live Search will count it multiple times. The file might not even exist, even though there is a link in a page, and Live Search would count it.
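To make the distinction concrete, here is a toy R sketch with made-up page and file names: a link-based count sees every (page, file) pair, while a document-based count sees each file once. It does not model the dead-link case, which would require fetching every target.

```r
# Hypothetical helper: compare a link-based count with a document-based count.
# Each row of `links` is one (linking page, linked file) pair.
count_links_vs_files <- function(links) {
  c(link_hits      = nrow(links),                 # what a link query counts
    distinct_files = length(unique(links$target))) # what a document query counts
}

# Made-up example: three pages link to the same report, one page to a memo.
links <- data.frame(
  page   = c("a.html", "b.html", "c.html", "c.html"),
  target = c("report.docx", "report.docx", "report.docx", "memo.docx")
)
count_links_vs_files(links)  # link_hits = 4, distinct_files = 2
```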

I’d note that applying this same Live Search technique to ODF files results in increasing ODF numbers around 10-fold as well. So there is no support for the claim that Google is somehow depressing the OOXML numbers.

In any case, it is good, from the trend perspective, to have multiple independent data sources, so I’ll start tracking and reporting the Live Search numbers as well. But I don’t have historical data there, so it will take a couple of months before we can see what trend is indicated there.

Are there any other search engines that allow queries based on document type?

“In JTC1 we vote. One country one vote. We do not vote based on a nation’s GDP. Jamaica and Japan are equal in ISO. We have engineers review the standards.”

How in the world is it logical–or just–to give Jamaica and Japan equal standing?

Japan’s population is MUCH larger than Jamaica’s. Its use of document technology is likely disproportionately larger (than population alone would suggest). And its technical acumen is almost certainly orders of magnitude greater than Jamaica’s.

It seems to me that the people who use standards the most and understand them the best should have a correspondingly larger say in what those standards consist of.

Otherwise you’re setting yourself up for all kinds of behind-the-scenes hijinks, with market leaders and wealthier nations buying off poorer ones, which is precisely what happened!

Queen, I stated a fact. That’s the way voting is decided in JTC1. Those are the written rules. So logic has nothing to do with it. There are many examples of decision making processes that are based on per-unit voting rights, independent of population or of economic power. The UN General Assembly, the US Senate and ISO are some notable examples.

Of course, there is nothing stopping someone from creating another standards organization with different voting rules. Rich countries, and rich companies, will make out well in either situation.

It looks like you didn’t include the NeoOffice variants of OpenOffice. That could increase the OpenOffice number (very few currently use the X11 OpenOffice version on Mac OS X).

“Using Live search I find much higher numbers”

You’re going to trust Microsoft’s own search engine? Their old search engine was demonstrably shown to provide Microsoft-biased results, so it wouldn’t be surprising if Live Search does so as well.

Queen Elizabeth,

Things get very problematic very quickly when you start saying that some voters are more equal than others.

One example is that the rich pay more taxes than the poor, but nobody would suggest they deserve more votes.

A more concrete example is that American elections give more weight to votes from members of less populous states. When a president is elected based on a minority of the vote (such as in the current president’s first term), it can be terribly divisive.

Finally, the idea of weighting votes only makes sense if people come to the ISO looking to defend their national interests, rather than trying to find the right technical solution to a problem.

As Martin Bryan recently commented, encouraging people to look at the ISO that way would reduce the quality of its work.

– Andrew

@Queen:

“How in the world is it logical–or just–to give Jamaica and Japan equal standing?”

Because technical standards should be evaluated on technical merits, not socio-, geo- or political factors.

Either you believe that we should introduce political weighting into a technical process, or that Jamaica can’t produce a technical evaluation of same worth as Japan.

Either option is despicable.